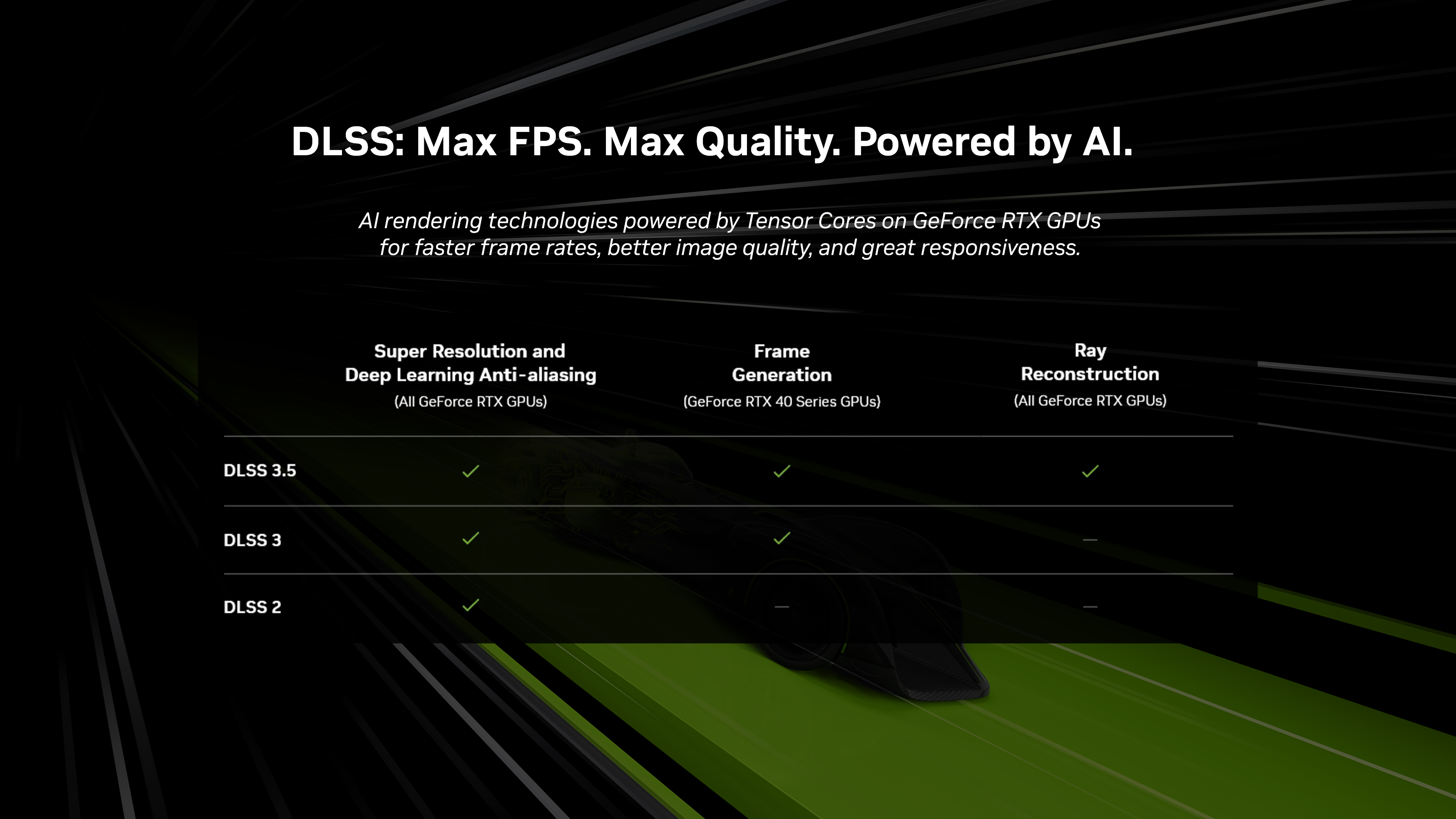

By now most GPU owners should be familiar with DLSS, an upscaling technique that renders a game at a lower resolution, then upscales it to a higher resolution using temporal information, motion vectors, and an AI super resolution model. NVIDIA DLSS revolutionized graphics by using AI super resolution and Tensor Cores on GeForce RTX GPUs to boost frame rates while delivering crisp, high-quality images that rival native resolution. Since the release of DLSS, 216 games and apps have incorporated the technology, providing faster frame rates and the performance headroom to make real-time videogame ray tracing a reality.

DLSS 2.0 is an excellent technology for gamers in that it delivers more performance than native resolution gaming with only a minor hit to visual quality – especially using the highest quality presets.

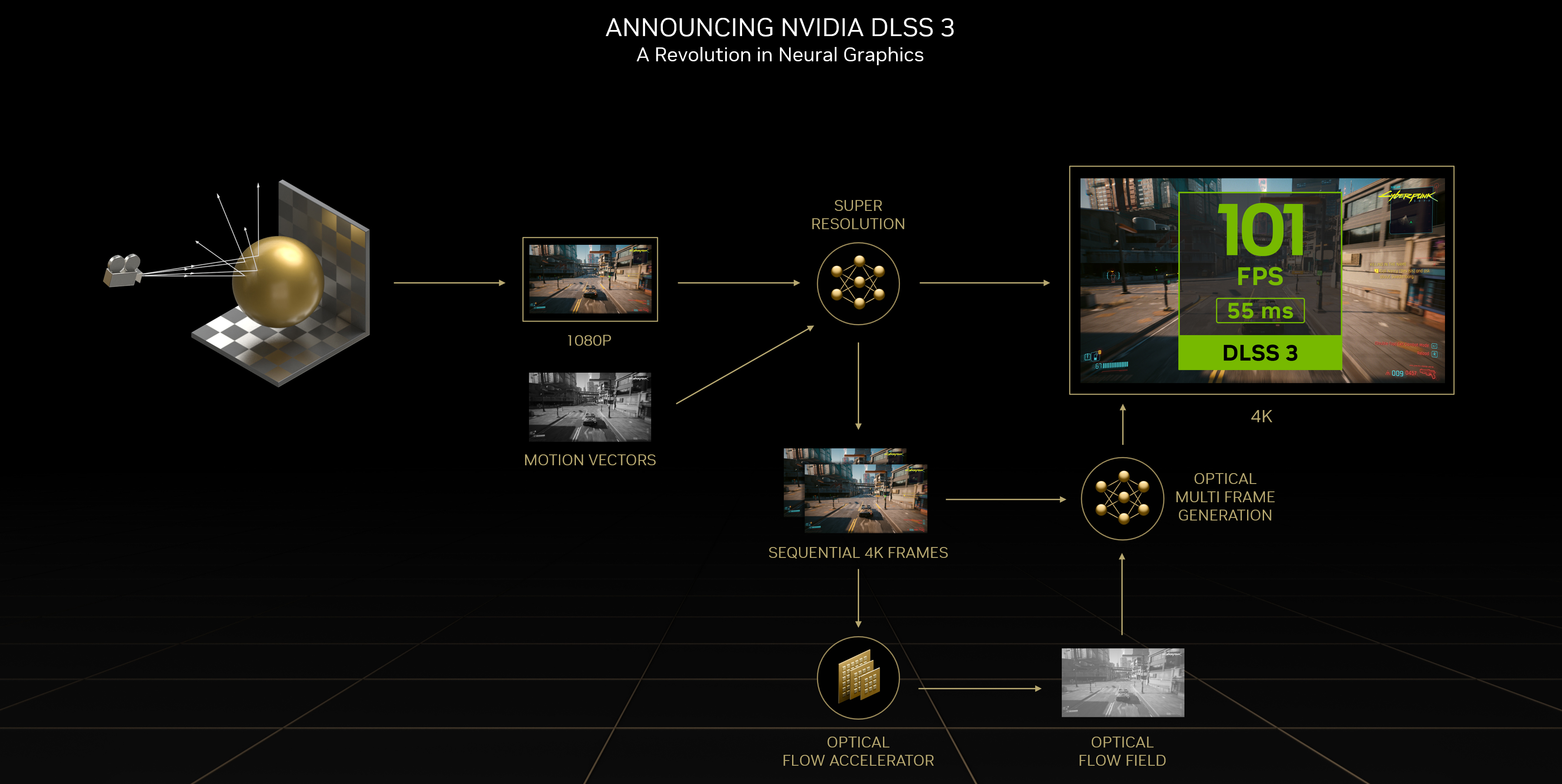

DLSS 3 takes this idea one step further by generating entirely new frames to slot between traditionally rendered frames. With DLSS 2, at least some portion of each frame shown was actually rendered by the game engine in some capacity, but with DLSS 3, Nvidia GPUs have gained the ability to create frames without any traditional rendering taking place. NVIDIA DLSS 3 brings incredible performance increases in many games and applications, and takes advantage of brand new AI technology exclusive to the RTX 40-series. The detailed and in-depth overview by Digital Foundry was a feast for the eyes (especially if those eyes belonged to a geeky gaming PC enthusiast), and although no FPS was mentioned in the demos and videos, it is clear to see how much improvement DLSS adds to games – absolutely in line with what NVIDIA has been promising.

DLSS 3 uses artificial intelligence to generate a frame between two rendered frames: a “temporal” estimate of what the scene would look like at that intermediate moment. It is not a replica of the preceding or subsequent frame, but rather something in between, and it is produced in real time.

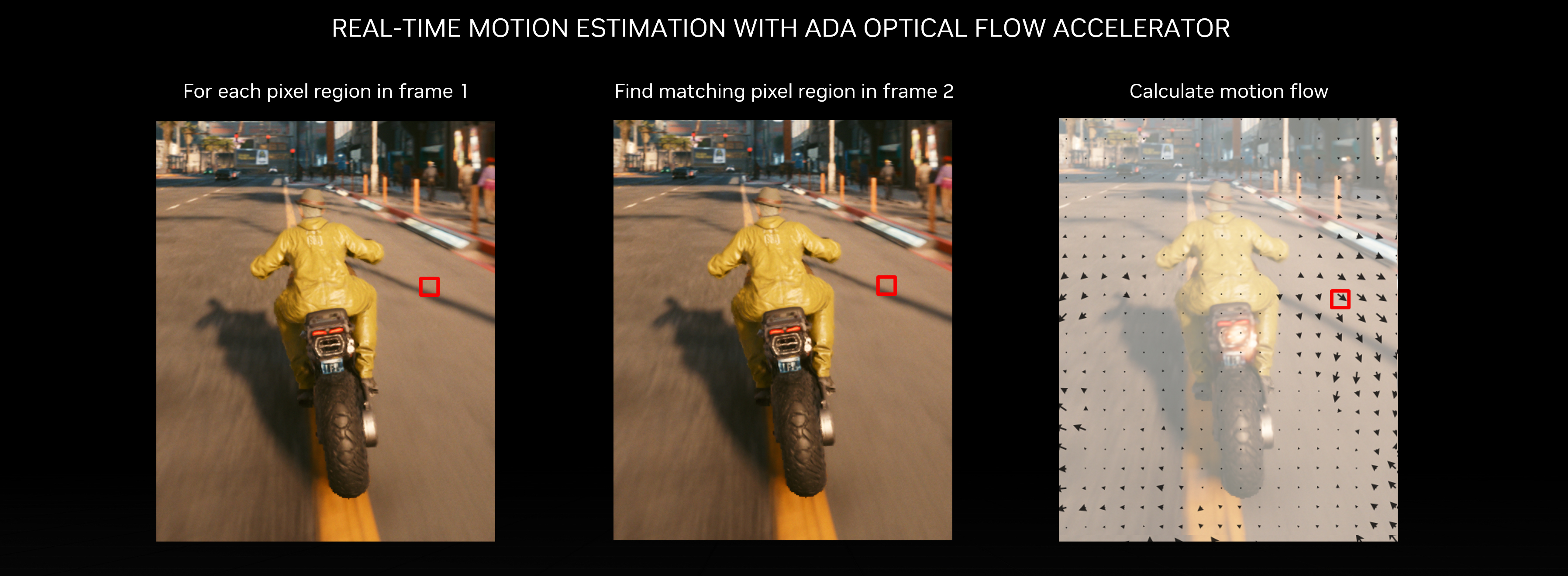

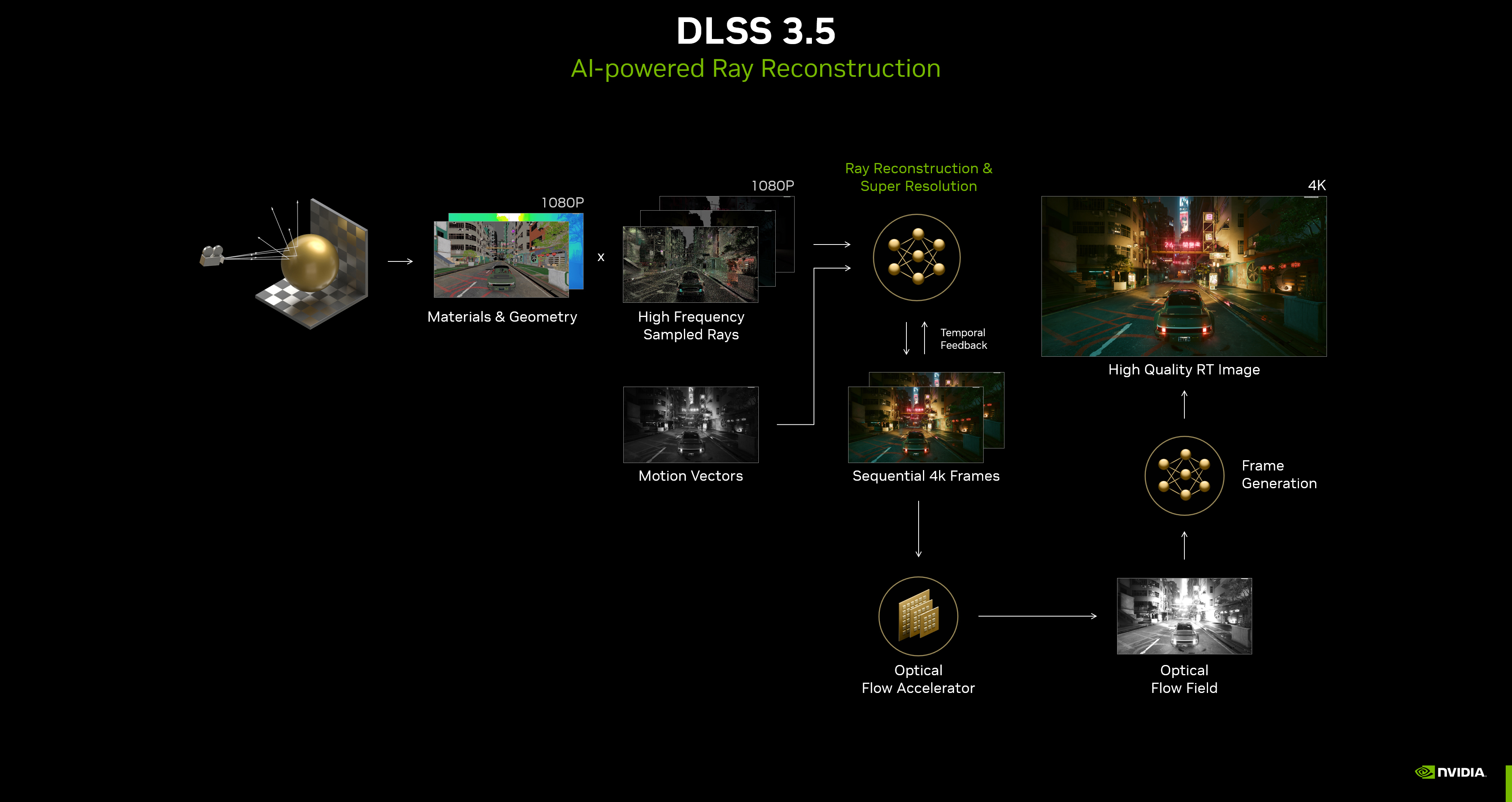

Ada’s Optical Flow Accelerator analyzes two sequential in-game frames and calculates an optical flow field. The optical flow field captures the direction and speed at which pixels are moving from frame 1 to frame 2. The Optical Flow Accelerator is able to capture pixel-level information such as particles, reflections, shadows, and lighting, which are not included in game engine motion vector calculations. In the motorcycle example below, the motion flow of the motorcyclist accurately represents that the shadow stays in roughly the same place on the screen with respect to their bike.
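To make the idea of a flow field concrete, here is a minimal sketch in plain Python. It is not NVIDIA's algorithm: the function name `warp_halfway`, the tiny list-based "frames", and the rule that moving pixels are drawn on top of static ones are all assumptions made for illustration. It only shows what it means to move each pixel half of the way along its flow vector to approximate an in-between frame.

```python
def warp_halfway(frame, flow, background=0):
    """Forward-warp each pixel of `frame` by half of its flow vector.

    frame: 2D list of pixel values.
    flow:  2D list of (dx, dy) tuples, the per-pixel motion from frame 1 to frame 2.
    """
    height, width = len(frame), len(frame[0])
    out = [[background] * width for _ in range(height)]
    # Two passes: static pixels first, moving pixels second, so that moving
    # content is drawn on top of the static background (an assumption).
    for moving in (False, True):
        for y in range(height):
            for x in range(width):
                dx, dy = flow[y][x]
                if ((dx, dy) != (0, 0)) != moving:
                    continue
                # Move the pixel half of the way towards its position in frame 2.
                nx, ny = x + round(dx / 2), y + round(dy / 2)
                if 0 <= nx < width and 0 <= ny < height:
                    out[ny][nx] = frame[y][x]
    return out


# A bright pixel (9) that will move two columns to the right by frame 2.
frame_1 = [[9, 0, 0, 0, 0]]
flow = [[(2, 0), (0, 0), (0, 0), (0, 0), (0, 0)]]
print(warp_halfway(frame_1, flow))  # [[0, 9, 0, 0, 0]]
```

A real frame generator also has to deal with occlusions, holes left behind by moving objects, and sub-pixel motion, which this toy ignores.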

With DLSS 3 enabled, AI is reconstructing three-fourths of the first frame with DLSS Super Resolution, and reconstructing the entire second frame using DLSS Frame Generation. In total, DLSS 3 reconstructs seven-eighths of the total displayed pixels.
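The seven-eighths figure follows from simple arithmetic, sketched below. The helper name `reconstructed_fraction` and its parameters are hypothetical; the only inputs taken from the text are that three-fourths of a rendered frame is reconstructed (which corresponds to rendering at half resolution along each axis) and that one fully generated frame follows each rendered frame.

```python
from fractions import Fraction


def reconstructed_fraction(render_scale_per_axis, generated_per_rendered=1):
    """Share of displayed pixels produced by AI rather than rendered by the engine.

    render_scale_per_axis: internal resolution divided by output resolution,
        per axis (1/2 is assumed here to match the three-fourths figure).
    generated_per_rendered: generated frames shown per rendered frame.
    """
    rendered_share = render_scale_per_axis ** 2  # pixels the engine renders in a rendered frame
    frames_total = 1 + generated_per_rendered
    # Only the rendered frame contributes engine-rendered pixels.
    engine_pixels = rendered_share / frames_total
    return 1 - engine_pixels


print(reconstructed_fraction(Fraction(1, 2)))  # 7/8
```

In other words, the engine renders one quarter of one frame out of every two displayed frames, which is one-eighth of the pixels; the remaining seven-eighths come from Super Resolution and Frame Generation.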

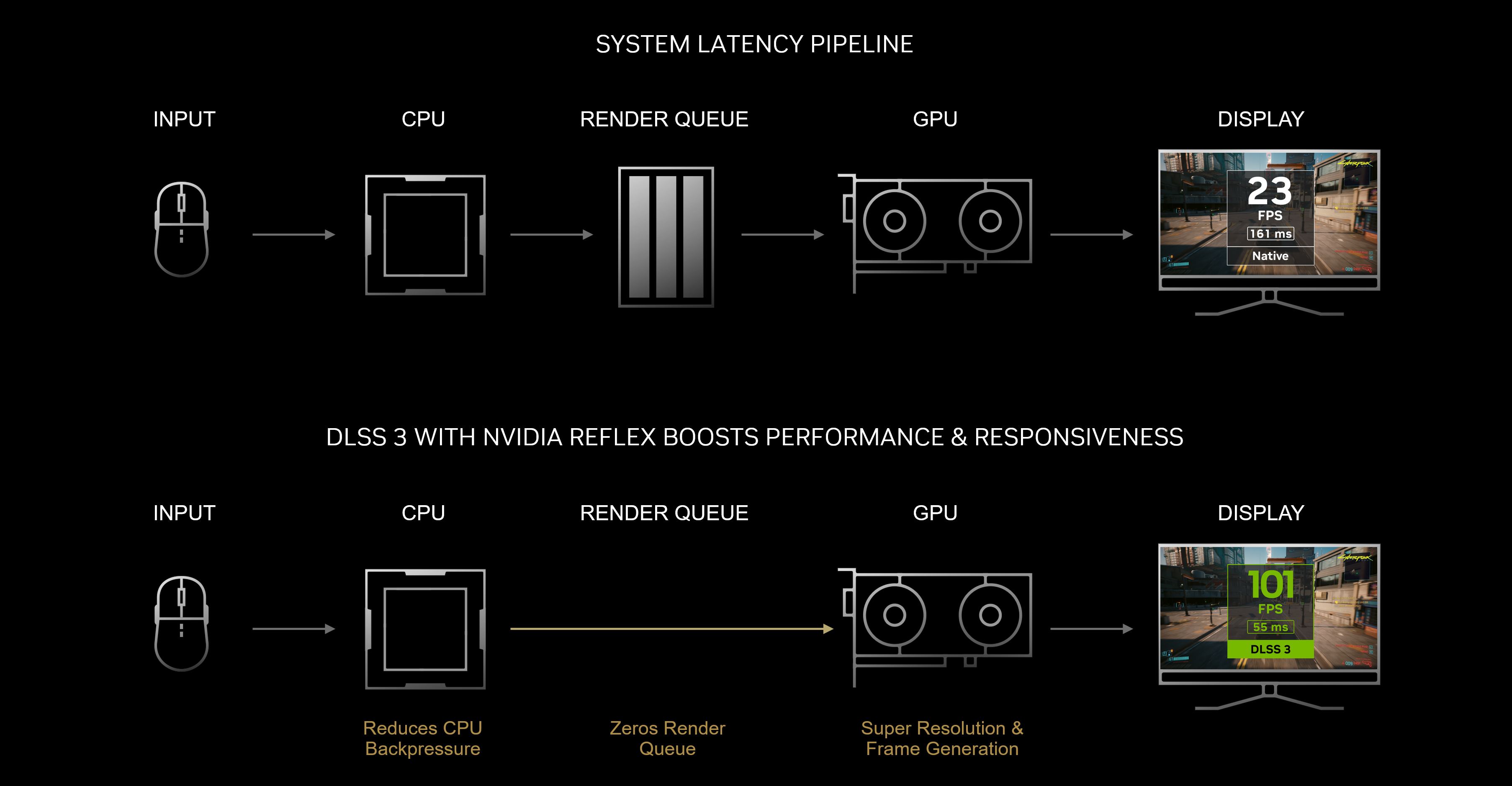

DLSS 3 also incorporates NVIDIA Reflex, which synchronizes the GPU and CPU, ensuring optimum responsiveness and low system latency. Lower system latency makes game controls more responsive, and ensures on-screen actions occur almost instantaneously once you click your mouse or other control input. When compared to native, DLSS 3 can reduce latency by up to 2X.

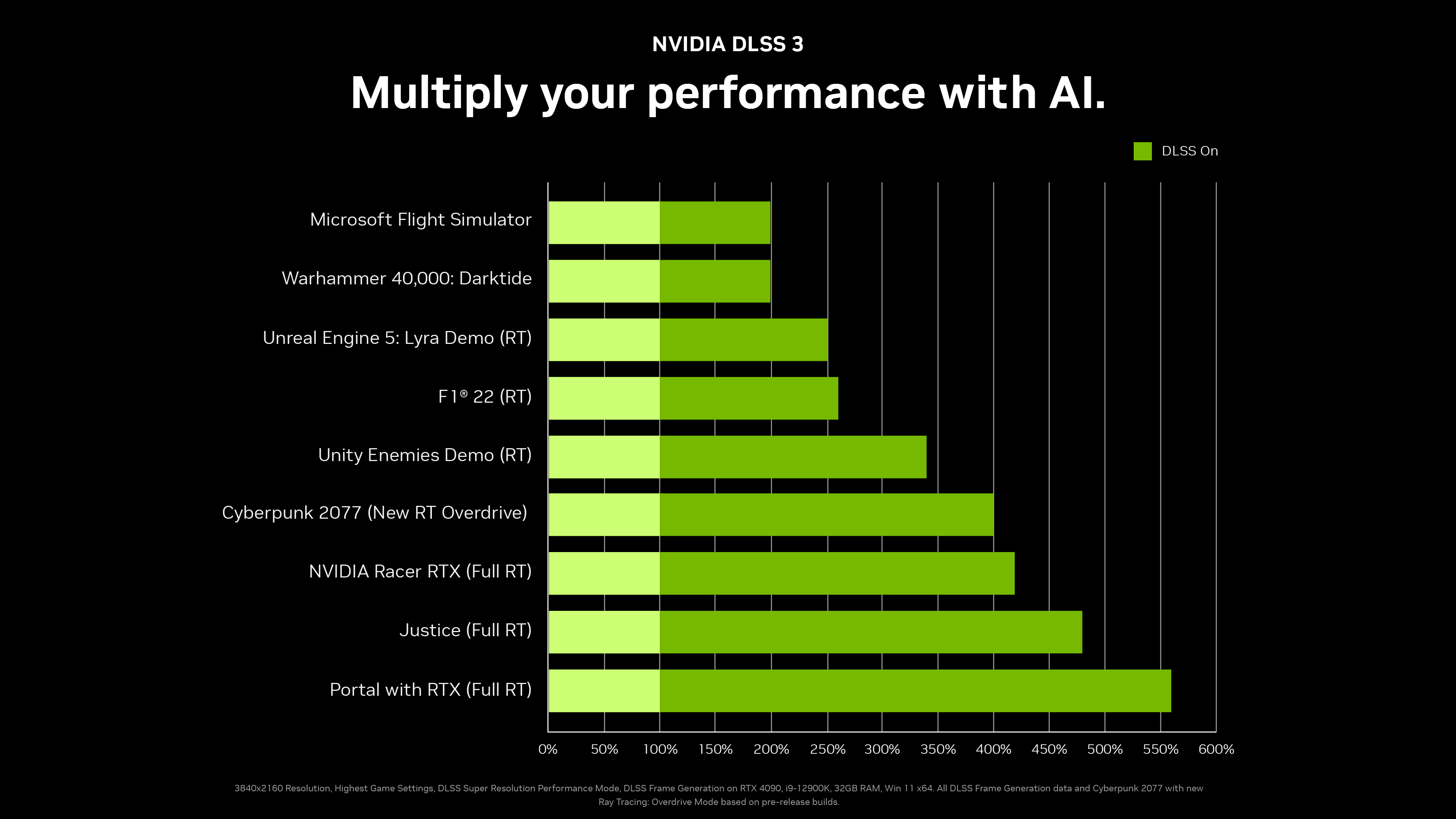

Across a set of games and engines, DLSS 3 helps increase GeForce RTX 40 Series performance by up to 4X compared to traditional rendering:

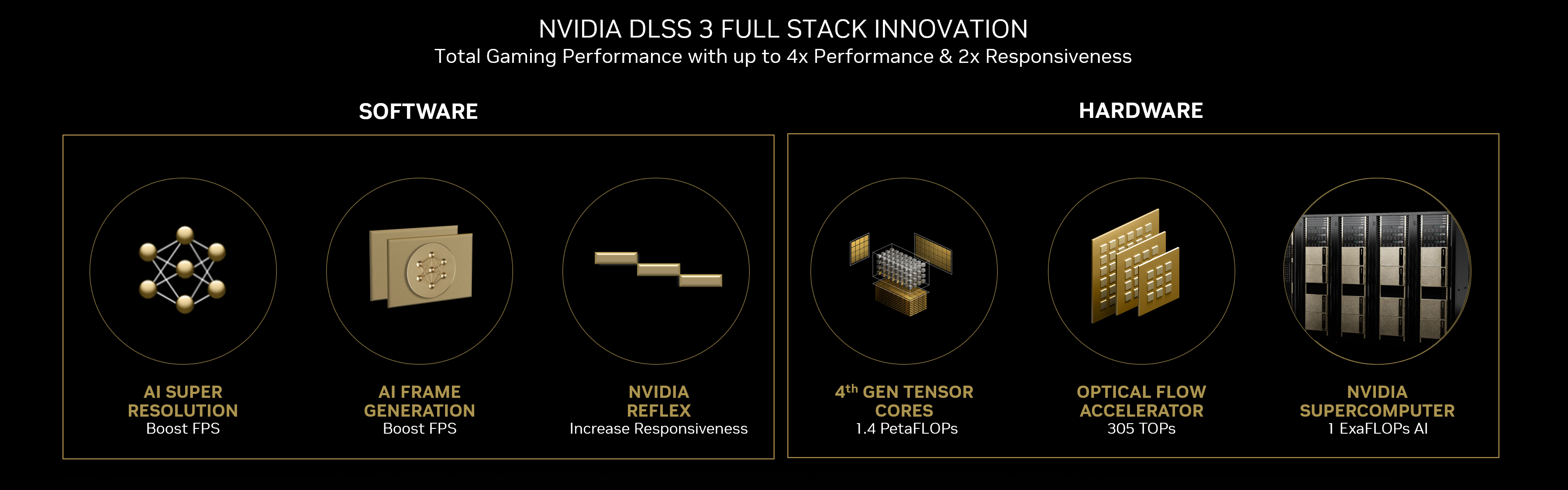

DLSS 3 combines advanced AI networks and Reflex software algorithms, dedicated Tensor Core and Optical Flow hardware, and an NVIDIA supercomputer that continuously trains and improves the AI networks. GeForce RTX 40 Series users get faster frame rates, quick responsiveness, and great image quality, which is only possible through full stack innovation.

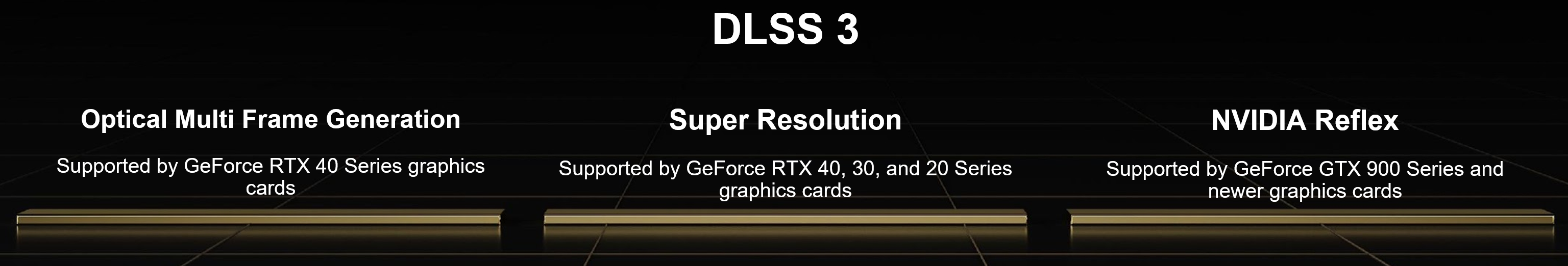

DLSS 3 technology is supported on GeForce RTX 40 Series GPUs. It includes 3 features: our new Frame Generation tech, Super Resolution (the key innovation of DLSS 2), and Reflex. Developers simply integrate DLSS 3, and DLSS 2 is supported by default. NVIDIA continues to improve DLSS 2 by researching and training the AI for DLSS Super Resolution, and will provide model updates for all GeForce RTX gamers, as we’ve been doing since the initial release of DLSS.

Up until now, DLSS has been all about taking your games, rendering them at low resolutions, and then, using the Tensor cores in NVIDIA's RTX graphics cards, upscaling them to a higher resolution. This allows lower-end graphics cards to run games at 2K or 4K resolutions at decent frame rates. While the initial iteration of DLSS was a bit rough around the edges, it improved dramatically over time, and DLSS-boosted games, depending on your settings, now look pretty much identical to native rendering to the naked eye.

With DLSS 3, though, compared to DLSS 2, the feature is now being transformed into a wider, performance-boosting solution that uses more than one AI party trick to boost your frame rates. DLSS 3 adds a new feature called "Optical Multi Frame Generation" that, in short, can generate whole frames using AI.

In addition to upscaling existing frames, DLSS 3 will generate intermediate frames for your games. It'll analyze two in-game frames, generate an optical flow field, closely look at all elements within a game, and using that information, generate an all-new frame to put between those two. This allows for a dramatic boost in performance. DLSS 3 also incorporates NVIDIA Reflex, an ultra-low-latency solution to make your games way more responsive.
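The pacing this implies can be sketched in a few lines. The function `interleave_generated` and the string labels are hypothetical stand-ins, not part of any NVIDIA API; the sketch only shows how one generated frame slots between each pair of rendered frames.

```python
def interleave_generated(rendered, generate):
    """Return the display order with one generated frame between each rendered pair."""
    if not rendered:
        return []
    displayed = [rendered[0]]
    for previous, following in zip(rendered, rendered[1:]):
        # The in-between frame needs both neighbours, so `following` must
        # already be rendered before the generated frame can be shown.
        displayed.append(generate(previous, following))
        displayed.append(following)
    return displayed


frames = ["F1", "F2", "F3"]
shown = interleave_generated(frames, lambda a, b: f"G({a},{b})")
print(shown)  # ['F1', 'G(F1,F2)', 'F2', 'G(F2,F3)', 'F3']
```

Three rendered frames become five displayed frames, and in general n rendered frames become 2n - 1. Because each generated frame depends on the frame after it, the newest rendered frame has to be held back briefly, which is one reason a latency-reduction feature such as Reflex is bundled alongside Frame Generation.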

DLSS 3 will allow budget gamers (as long as they have a compatible GPU, of course) to dramatically increase their frame rates while also bumping their resolution up. Super Resolution alone was already working miracles, and with Frame Generation promising up to 4X frame rate increases, it should be way better; it might finally make 2K/4K gaming attainable for everyone. Ada Lovelace-based budget GPUs could have amazing performance despite their unassuming specifications, all thanks to DLSS 3.

Since its initial release, the AI models behind DLSS have kept learning, leading to even better results and new innovations that further multiply performance:

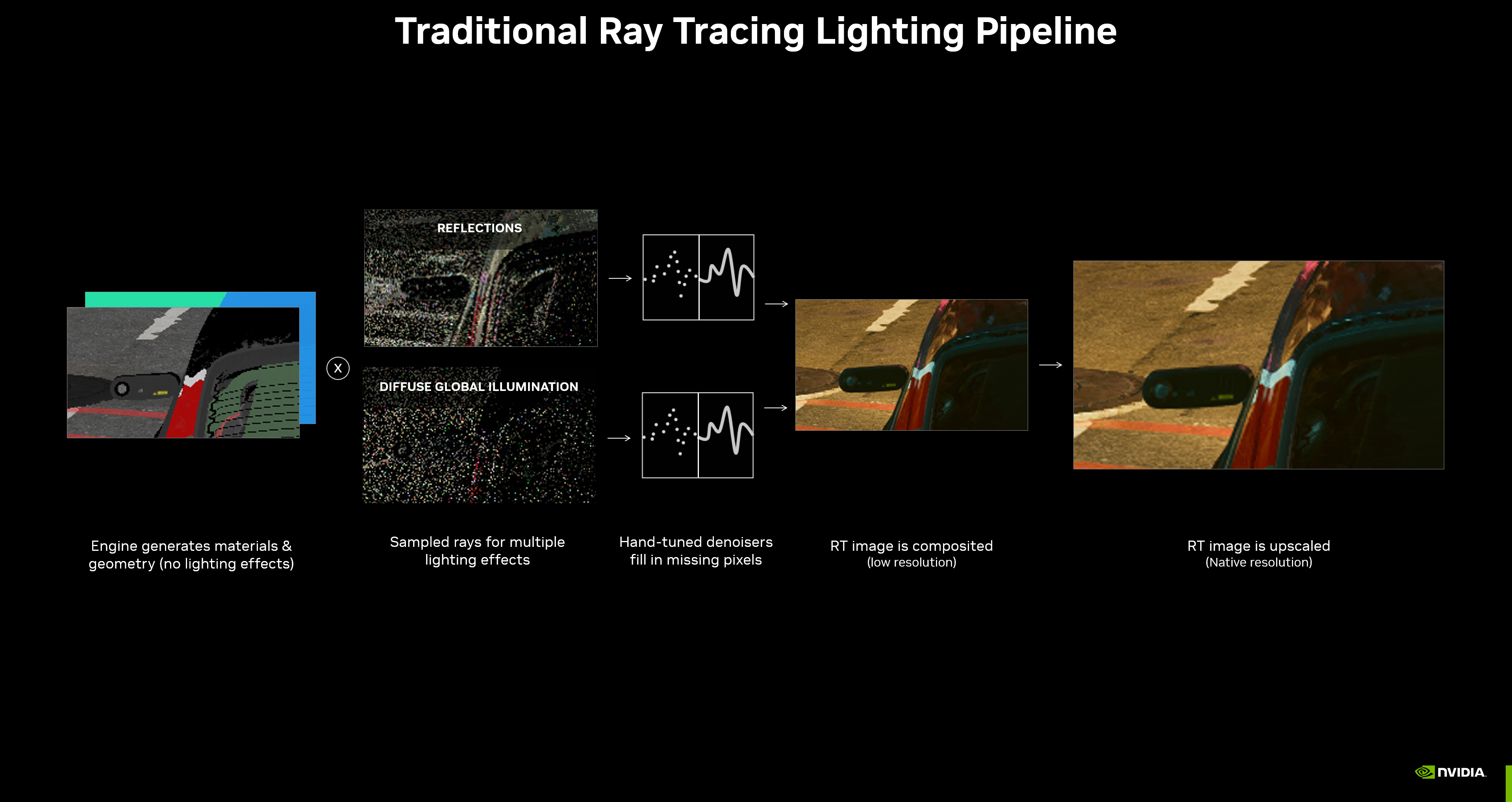

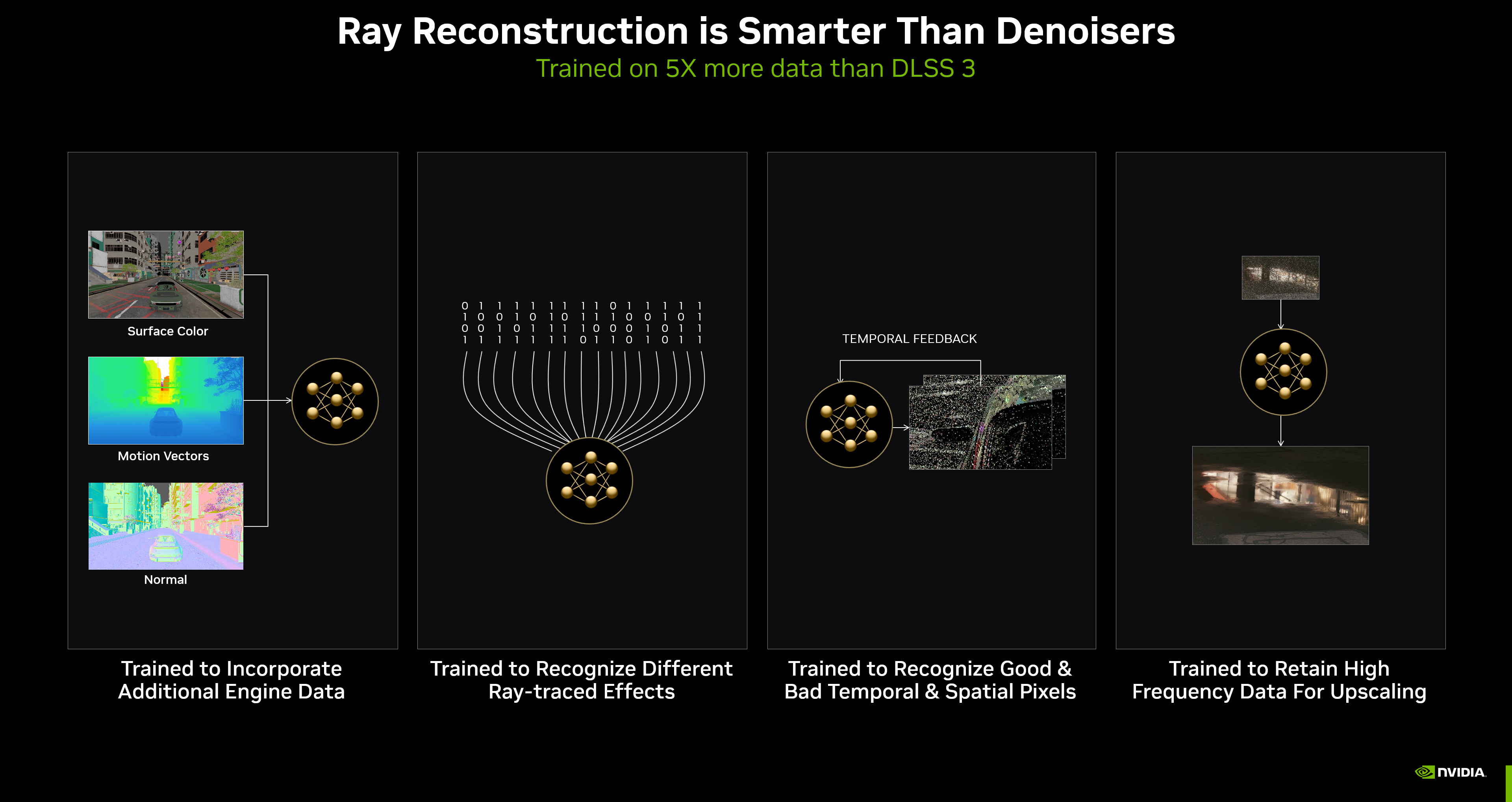

To appreciate the benefits of Ray Reconstruction, let’s look at how ray tracing works.

First, a game engine generates the geometry and materials of a scene, all of which have physically based attributes that affect their appearance and how light interacts with them. A sample of rays are then shot from the camera’s viewpoint, determining the properties of light sources in a scene and how light reacts when it hits materials. For instance, if rays strike a mirror, reflections are generated.

However, shooting rays for every pixel on your screen is too computationally demanding, even for offline renderers that calculate scenes over the course of several minutes or hours. So instead, ray samples must be used: a handful of rays are fired at various points across the scene to give a representative sample of the scene’s lighting, reflectivity and shadowing.

The output is a noisy, speckled image with gaps, good enough to ascertain how the scene should look when ray-traced.
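A toy model shows why sparse sampling looks this way. Everything here is an assumption for illustration: `sparse_trace` is a hypothetical helper, the "scene" is just a grid of probabilities that a ray reaches a light, and each sampled pixel gets exactly one ray.

```python
import random


def sparse_trace(visibility, sample_probability, rng):
    """Trace at most one ray per pixel; pixels that get no ray stay None.

    visibility: 2D list of floats in [0, 1], the hypothetical chance that a
        ray fired through that pixel reaches a light source.
    """
    image = []
    for row in visibility:
        traced_row = []
        for chance in row:
            if rng.random() < sample_probability:
                # One ray is a coin flip: it reaches the light or it does not.
                traced_row.append(1.0 if rng.random() < chance else 0.0)
            else:
                traced_row.append(None)  # gap: no ray was fired here
        image.append(traced_row)
    return image


rng = random.Random(0)  # fixed seed so the sketch is repeatable
scene = [[0.5] * 8 for _ in range(4)]  # evenly lit toy scene, true value 0.5 everywhere
for row in sparse_trace(scene, 0.5, rng):
    # '.' marks a gap, '#' a lit sample, ' ' a dark sample
    print("".join("." if v is None else ("#" if v else " ") for v in row))
```

Even though the true scene is uniformly half-lit, every sampled pixel comes out fully lit or fully dark and many pixels have no value at all, which is the speckle-and-gaps pattern described above.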

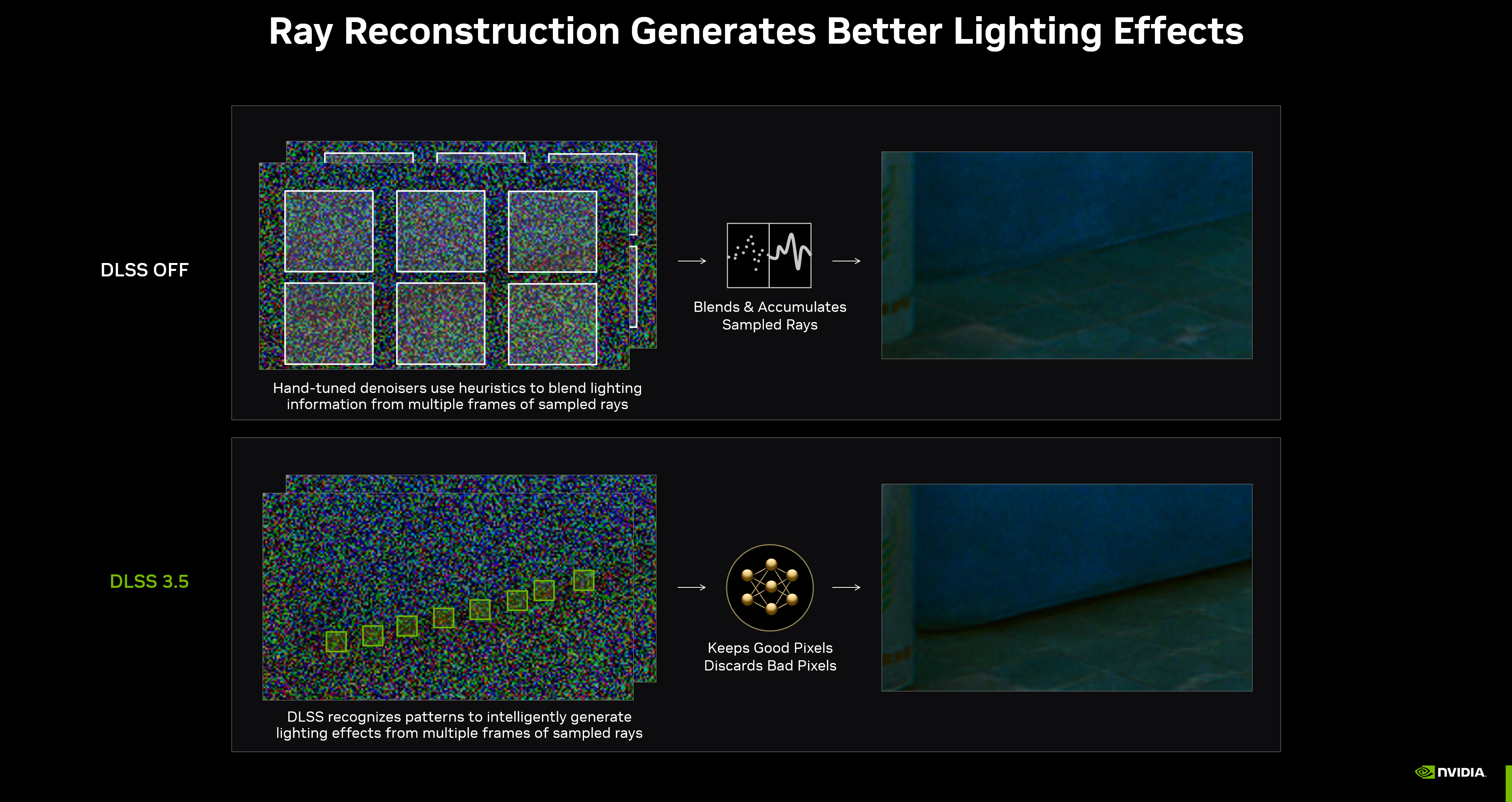

To fill in the missing pixels that weren’t ray-traced, hand-tuned denoisers use two different methods: temporally accumulating pixels across multiple frames, and spatially interpolating them to blend neighboring pixels together. Through this process, the noisy raw output is converted into a ray-traced image.
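The two methods can be sketched as follows. This is a deliberately simplified illustration, not how any shipping denoiser is written: the function names, the `alpha` blend weight, and the use of `None` for pixels without a ray sample are all assumptions.

```python
def temporal_accumulate(history, current, alpha=0.2):
    """Blend the current frame's samples into the accumulated history, per pixel."""
    out = []
    for history_row, current_row in zip(history, current):
        row = []
        for h, c in zip(history_row, current_row):
            if c is None:
                row.append(h)  # no new sample: reuse what earlier frames gave us
            elif h is None:
                row.append(c)  # no history yet: take the new sample as-is
            else:
                row.append((1 - alpha) * h + alpha * c)  # exponential moving average
        out.append(row)
    return out


def spatial_fill(image):
    """Fill each missing pixel with the average of its available 4-neighbours."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            if image[y][x] is not None:
                continue
            neighbours = [
                image[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < height and 0 <= nx < width and image[ny][nx] is not None
            ]
            if neighbours:
                out[y][x] = sum(neighbours) / len(neighbours)
    return out


print(temporal_accumulate([[0.0]], [[1.0]], alpha=0.25))  # [[0.25]]
print(spatial_fill([[1.0, None, 3.0]]))                   # [[1.0, 2.0, 3.0]]
```

The blend weight and the neighbourhood shape are exactly the kind of knobs that have to be tuned by hand for each lighting effect.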

These denoisers are manually-tuned and processed for each type of ray-traced lighting present in a scene, adding complexity and cost to the development process, and reducing the frame rate in highly ray-traced games where multiple denoisers operate simultaneously to maximize image quality.

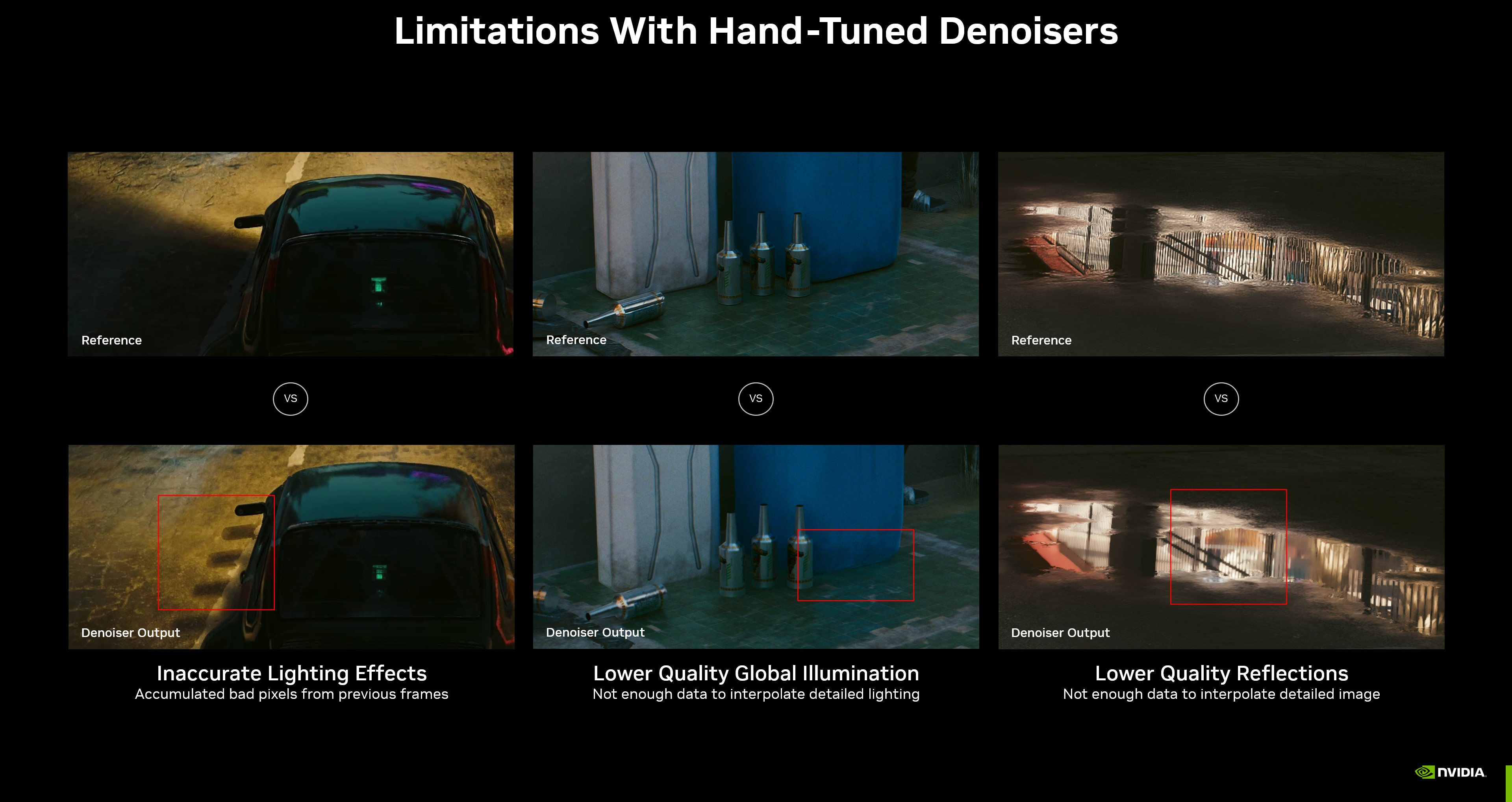

Each hand-tuned denoiser accumulates pixels from multiple frames to increase detail, in effect stealing rays from the past, but at the risk of introducing ghosting, removing dynamic effects, and reducing the quality of others. It also interpolates neighboring pixels, and blends this information together, but at the risk of blending away too much detailed information, or not blending enough and creating non-uniform lighting effects.
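The ghosting risk is easy to see with a single pixel. In this sketch (the function name and the `alpha` values are illustrative assumptions), a light that was on for three frames switches off, and the accumulated value keeps glowing for several frames afterwards.

```python
def accumulate_over_time(samples, alpha):
    """Running exponential average of one pixel's samples across frames."""
    value = samples[0]
    history = [value]
    for sample in samples[1:]:
        value = (1 - alpha) * value + alpha * sample
        history.append(value)
    return history


# The light is on for three frames, then switches off.
samples = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
print(accumulate_over_time(samples, alpha=0.5))
# [1.0, 1.0, 1.0, 0.5, 0.25, 0.125]
```

A larger `alpha` reacts faster to change but keeps more noise from each new frame; a smaller one is smoother but leaves a longer trail. That trade-off is what a hand-tuned denoiser has to fix in advance.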

Upscaling is the last stage of the ray-traced lighting pipeline, and is key to experiencing the most detailed and demanding games at fast frame rates. But with denoising removing or decreasing the quality of effects, the limitations of hand-tuned denoisers are amplified, removing fine detail (referred to as high-frequency information) that upscalers use to output a crisp, clean image.

The solution: NVIDIA DLSS 3.5. Our newest innovation, Ray Reconstruction, is part of an enhanced AI-powered neural renderer that improves ray-traced image quality for all GeForce RTX GPUs by replacing hand-tuned denoisers with an NVIDIA supercomputer-trained AI network that generates higher-quality pixels in between sampled rays.

Trained with 5X more data than DLSS 3, DLSS 3.5 recognizes different ray-traced effects to make smarter decisions about using temporal and spatial data, and to retain high frequency information for superior-quality upscaling.

Trained using offline-rendered images, which require far more computational power than can be delivered during a real-time game, Ray Reconstruction recognizes lighting patterns from training data, such as that of global illumination or ambient occlusion, and recreates it in-game as you play. The results are superior to using hand-tuned denoisers.

In the following scene from Cyberpunk 2077, the inaccurate headlight illumination which surrounds the car is a result of the hand-tuned denoiser pulling in inaccurate lighting effects from previous frames. DLSS 3.5 accurately generates lighting, so you can make out the beam of the headlights, and see light reflect on the curb in front of the car.

The streets of Cyberpunk 2077’s Night City are filled with reflections from rotating billboards and neon lights. By activating DLSS 3.5, their quality and clarity are vastly improved citywide.

Creative applications have a wide variety of content that is difficult for traditional denoisers, because those denoisers require hand-tuning per scene. As a result, when previewing content you get suboptimal image quality. With DLSS 3.5, the AI neural network is able to recognize a wide variety of scenes, producing high-quality images during preview and before committing hours to a final render. D5 Render, an industry-leading app for architects and designers, will be available with DLSS 3.5 this fall.

NVIDIA DLSS 3.5 further improves image quality for ray-traced effects by replacing multiple hand-tuned denoisers with Ray Reconstruction (RR).

Combining Super Resolution, Frame Generation and Ray Reconstruction, DLSS 3.5 multiplies Cyberpunk 2077 frame rates by a total of 5X compared to native 4K DLSS OFF rendering.

GeForce RTX 40 Series users can combine Super Resolution and Frame Generation with Ray Reconstruction for breathtaking performance and image quality, while GeForce RTX 20 and 30 Series users can add Ray Reconstruction to their AI-powered arsenal alongside Super Resolution and DLAA.

Ray Reconstruction is a new option for developers to improve image quality for their ray-traced titles and is offered as part of DLSS 3.5. Rasterized games featuring DLSS 3.5 also include our latest updates to Super Resolution and DLAA, but will not benefit from Ray Reconstruction due to the lack of ray-traced effects.

Source: https://www.nvidia.com/en-us/geforce/news/nvidia-dlss-3-5-ray-reconstruction/

Source: https://www.howtogeek.com/837011/what-is-dlss-3-and-can-you-use-it-on-existing-hardware/

Source: https://www.nvidia.com/en-us/geforce/news/dlss3-ai-powered-neural-graphics-innovations/

Source: https://www.chillblast.com/blog/what-is-dlss-3-and-is-it-worth-it